Introduction

In this post I am going to talk about writing bash scripts. This is a useful skill because it saves time, especially with repetitive tasks. I will cover what a bash script is, how to write and run one, and some use cases.

What is bash scripting?

In order to understand what a bash script is, one needs to understand what a script is.

A script is the written text of a play, film or broadcast.

In the context of a play, a script tells an actor what to say and do, and the actor does what the script says. The same root appears in terms such as manuscript and prescription. Taking prescription as an example, it is a set of instructions from a doctor that authorizes a patient to be issued with medicine or treatment. The patient only gets what is on the prescription. In both cases there is no deviation from the script: what is on the script is what is done.

Now, bearing the explanation above in mind, a bash script is a text file intended for the bash shell, and its content is a series of commands. In other words, these commands tell the bash shell what to do. For example, mkdir is a command used to make a directory, and like any other command it can be used in a bash script.

If the bash file has the following, as an example,

#!/bin/bash

mkdir tutorial

it is telling the bash shell to make a directory called tutorial. The day-to-day commands you use on the terminal can therefore be used to write a bash script, and the commands you find in a bash script can be used on the terminal as well.
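As a minimal sketch of that idea, the script below strings together three everyday commands. The file name notes.txt and the use of a temporary working directory are assumptions made only for this illustration.

```shell
#!/bin/bash

# Work in a throwaway directory so the example does not touch your own files.
cd "$(mktemp -d)" || exit 1

# Each of these lines could also be typed one by one at the terminal prompt.
mkdir tutorial              # make a directory called tutorial
touch tutorial/notes.txt    # create an empty file inside it (hypothetical name)
ls tutorial                 # list the contents of the new directory
```

Typing the same three commands at the prompt, one after the other, would give the same result.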

How to write and use bash scripts

Now that we have an understanding of what a bash script is, the next thing we want to know is how to write and use bash scripts. On Linux, the conventional extension for a bash script is .sh. The extension is only a convention: a file ending in .sh is most likely a shell script, but Linux does not use the extension to decide how to run the file. When writing a bash script, the first line to write is:

#!/bin/bash

This indicates that you want the bash shell to execute the commands in the file.

A little background

The character sequence consisting of the hash and exclamation mark (#!) is known as the shebang. In the Unix/Linux world, the shebang is an interpreter directive: when it appears at the very start of an executable file, it tells the system which program should be used to run the rest of the file. Some examples of shebang lines are as follows:

#!/bin/sh – Execute the file using the Bourne shell, or a compatible shell, with path /bin/sh

#!/bin/bash – Execute the file using the Bash shell.

#!/bin/csh -f – Execute the file using csh, the C shell, or a compatible shell, and suppress the execution of the user’s .cshrc file on startup

#!/usr/bin/perl -T – Execute using Perl with the option for taint checks

#!/usr/bin/env python – Execute using Python by looking up the path to the Python interpreter automatically via env

#!/bin/false – Do nothing, but return a non-zero exit status, indicating failure. Used to prevent stand-alone execution of a script file intended for execution in a specific context, such as by the . command from sh/bash, source from csh/tcsh, or as a .profile, .cshrc, or .login file.
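To see a shebang for yourself, you can read the first line of a script. The following is a small sketch; hello.sh is a hypothetical file created in a temporary directory only for this illustration.

```shell
#!/bin/bash

# Create a two-line script in a temporary directory (hello.sh is a made-up name).
workdir="$(mktemp -d)"
printf '%s\n' '#!/bin/bash' 'echo "hello from bash"' > "$workdir/hello.sh"

# The shebang is the very first line of the file.
first_line="$(head -n 1 "$workdir/hello.sh")"
echo "$first_line"

# Drop the first two characters (#!) to get the interpreter path.
interpreter="${first_line:2}"
echo "$interpreter"
```

The first echo prints #!/bin/bash and the second prints /bin/bash, which is the program that would be started to run the file.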

Whatever follows the shebang is the body of the script, that is, what you want to accomplish when the file is executed. Using the above example again:

#!/bin/bash

mkdir tutorial

save the file as make_directory.sh. After saving the file, make it executable by changing its mode with chmod 755 make_directory.sh or chmod +x make_directory.sh. You can then run it with ./make_directory.sh. The ./ prefix is needed because typing just make_directory.sh makes the shell search for a command with that name in the directories listed in the PATH variable, and the current directory is normally not one of them. In Linux, “.” refers to the current directory, so ./make_directory.sh is saying “in this directory there is an executable file called make_directory.sh, run it”.
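The steps above can be sketched from start to finish as follows. The temporary working directory is an assumption added so the example cleans up easily; in practice you would work in a directory of your choice, and /bin/bash is assumed to exist on your system.

```shell
#!/bin/bash

# Work in a temporary directory so nothing is left behind in your own folders.
cd "$(mktemp -d)" || exit 1

# Step 1: save the two-line script as make_directory.sh.
printf '%s\n' '#!/bin/bash' 'mkdir tutorial' > make_directory.sh

# Step 2: make the file executable.
chmod +x make_directory.sh

# Step 3: run it by path; "./" points at the current directory.
./make_directory.sh

# Confirm that the script created the directory.
ls -d tutorial
```

If everything worked, the last command prints tutorial.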

Use cases

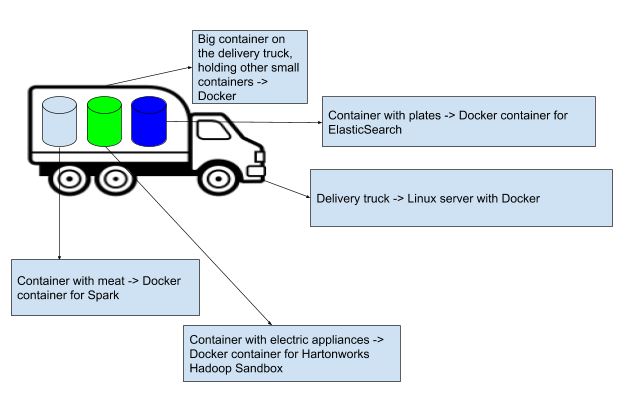

Making a directory is not all that you can do with bash scripts. One use case of bash scripts is automating repetitive tasks, such as submitting a Spark job, connecting to a Kubernetes pod, getting the list of pods and so forth. I am going to add some of the scripts that I use to my GitHub repository. Feel free to look through them; you are welcome to add to or remove from them.
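As a simple illustration of automating a repetitive task, the sketch below creates the same folder layout every time it is run. The project name my_project and the sub-folder names are hypothetical and not taken from my own scripts; a temporary directory is used so the example is safe to try.

```shell
#!/bin/bash

# Hypothetical example: set up the same folder layout for every new project.
# "my_project" and the sub-folder names are illustrative only.
project_root="$(mktemp -d)/my_project"

# -p creates missing parent directories and does not fail if they already exist.
mkdir -p "$project_root/src" "$project_root/tests" "$project_root/docs"

# Show what was created.
ls "$project_root"
```

Instead of typing the mkdir commands by hand for every project, you run the script once and get the same result each time.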

Conclusion

This was a high-level overview of what a bash script is, with a few examples of use cases. Bash scripts are becoming more and more important, especially with the rise of containerization. They assist in automating tasks, and they can also be used to execute commands when, for example, a Docker container is started. In upcoming posts I will look at variables, functions, loops and so forth when writing bash scripts.